A daily log of my robotics journey in SF, Summer 2025

What is this? Since the 4th of May, I've been working full-time on robotics. I use this space to collect my daily thoughts, document what I’ve worked on, and note down any questions that come up. Sharing this helps me stay accountable and reflect on whether my day-to-day efforts are effective in pursuing my goal of building a robotics company.

Only data collection

Thursday, 2nd of July

Today I collected 349 episodes of data. It was very monotonous and boring, but the ability to endure boring work is important for success.

Some thoughts from the day:

- I see how a limitation of imitation learning is that it's hard for the robot to become better than the demonstrations. For better results, robots presumably need to be able to do some form of experimentation themselves. Reward models might also be an interesting avenue: in this approach, a model (not the one controlling the robot) evaluates the video and assigns a score from 0–100 for each frame based on how close the robot is to successfully completing the task. This way, the robot could collect data itself and you could iteratively retrain the model using only the best samples.

- For my chess game, it would be good if the robot's camera were at the bottom rather than the top of the gripper. This way, the arm would maintain visual contact with the piece to be moved throughout the entire movement.

- It also makes sense to me that more cameras (>2) could be helpful for training precise models.
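The reward-model idea from the first bullet can be sketched as a filtering step. This is a minimal illustration rather than a tested training recipe: `Episode`, `episode_score`, and `select_best` are hypothetical names, and the per-frame scores are assumed to come from a separate reward model that rates each frame from 0 to 100.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    episode_id: int
    frame_scores: list[float]  # assumed per-frame reward-model scores, 0-100


def episode_score(episode: Episode) -> float:
    """Score an episode by its last frame, i.e. how close the task was to done at the end."""
    return episode.frame_scores[-1] if episode.frame_scores else 0.0


def select_best(episodes: list[Episode], keep_fraction: float = 0.25) -> list[Episode]:
    """Keep the top `keep_fraction` of episodes by score (at least one if any exist)."""
    ranked = sorted(episodes, key=episode_score, reverse=True)
    n_keep = max(1, round(len(ranked) * keep_fraction))
    return ranked[:n_keep]


# Made-up rollouts collected by the robot itself.
rollouts = [
    Episode(0, [5, 20, 40]),
    Episode(1, [10, 60, 95]),
    Episode(2, [0, 0, 10]),
    Episode(3, [15, 50, 80]),
]
best = select_best(rollouts, keep_fraction=0.5)
print([e.episode_id for e in best])  # episodes to add to the next training round
```

Scoring by the final frame is only one possible choice; averaging the scores or requiring a threshold would be equally plausible.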

Recording an episode takes 15s + ~30–40s to encode/compress the videos. If I collect more data, it would be worth investing time to see if I could encode all videos in batch after recording them.
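A quick back-of-the-envelope check of what the encoding step costs, using the 349 episodes and the per-episode timings from above. It ignores the time needed to reset the scene between episodes, so real sessions take longer; `collection_time_hours` is a hypothetical helper.

```python
def collection_time_hours(episodes: int, record_s: float, encode_s: float) -> float:
    """Total time if every episode is recorded and then encoded before the next one starts."""
    return episodes * (record_s + encode_s) / 3600


episodes = 349
record_s = 15

recording_only = collection_time_hours(episodes, record_s, 0)
for encode_s in (30, 40):
    total = collection_time_hours(episodes, record_s, encode_s)
    share = encode_s / (record_s + encode_s)
    print(
        f"encode {encode_s}s: {total:.2f} h in total, "
        f"{recording_only:.2f} h of it recording, "
        f"{share:.0%} spent waiting for encoding"
    )
```

With these numbers, well over half of each session goes into waiting for the encoder, which is the time a batch encoding step after recording could move out of the session.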

First policy results!

Tuesday, 1st of July

Instead of just collecting a thousand episodes and hoping for the best, I want to think more intentionally about how to maximise learning while doing it—and ideally create shareable insights for X. If I later write a blog post, it would be great to include useful learnings along the way.

One thing my thesis supervisor at OpenAI taught me is to always sketch the plots you want to show before writing any code. Following that advice, I drafted some plot ideas and outlined the data I'd need to collect to generate them.

Research questions I want to run experiments for:

- Which policy performs best: ACT, Pi0-fast, or SmolVLA, across different dataset sizes?

| | 100 episodes | 500 episodes | 1000 episodes |
|---|---|---|---|
| ACT | | | |
| Pi0-fast | | | |
| SmolVLA (finetune) | | | |

- How does policy performance improve with training steps?

| | 20k | 40k | 60k | 80k | 100k |
|---|---|---|---|---|---|
| ACT | | | | | |
| Pi0-fast | | | | | |
| SmolVLA (finetune) | | | | | |

- How do data quantity and task diversity affect accuracy? (All experiments use the best model from experiment 1)

| | 25% of tasks | 50% of tasks | 75% of tasks | 100% of tasks |
|---|---|---|---|---|
| 25% of data | | | | |
| 50% of data | | | | |
| 75% of data | | | | |
| 100% of data | | | | |

Writing this down, I realised that these experiments might take considerable effort, and questioned whether this is the best use of time - or if I should instead focus on the main goal: getting the robot to play a full game autonomously.

Pros of doing the experiments:

- They’ll help me build intuition for how well different models perform and how dataset size and diversity affect learning.

- They could result in a compelling blog post that’s valuable for others starting out in robotics.

Cons:

- It’s a detour that could slow down progress on the main objective, which risks losing momentum - something I’ve found crucial for maintaining motivation and output.

I decided to structure data collection so I can still run these experiments later, but first focus on getting the robot to play a full game.

After yesterday’s training failed due to insufficient Lightning AI credits, I topped up and resumed training the ACT model to 100k steps. While it was running, I evaluated the 20k checkpoint. Out of 30 attempts, it succeeded in 7 - roughly a 23% success rate.

That’s not great, but it does confirm that the model understands the visual hints (the overlaid circles) from the context camera - that was the main goal of this test.

The most common failure mode was the gripper missing the piece during pickup. I suspect the model overfitted to the exact chessboard position in training and couldn’t handle small shifts. The second most common issue was the gripper either releasing too early or not at all - something I expect will improve with more data.
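Since the 7-out-of-30 result above comes from a small sample, it can help to put an uncertainty range around it. The sketch below uses the Wilson score interval, which is my choice of method and not something used in the original evaluation; `wilson_interval` is a hypothetical helper.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a success rate (z = 1.96)."""
    p = successes / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half_width = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return centre - half_width, centre + half_width


low, high = wilson_interval(7, 30)
print(f"observed {7 / 30:.0%}, plausible range roughly {low:.0%} to {high:.0%}")
```

The range is wide with only 30 attempts, so comparisons between checkpoints would need more evaluation runs before small differences mean much.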

I spent the rest of the evening collecting data by replaying the Kasparov vs. Van Foreest (2021) game. I controlled the black pieces and used the same colour overlays as in the earlier experiment.

I noticed two interesting things:

- Captures require two policy runs: First to remove the opponent’s piece, then to move our piece into place. I’ll need a good strategy to choose the drop-off location for the captured piece - ideally somewhere close to the robot and not too close to other pieces.

- Castling also requires two moves: My current visualiser only draws one arrow, so I’ll need to update the logic to handle two.
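The two observations above suggest splitting each chess move into one or more pick-and-place actions before handing them to the policy. A minimal sketch, with everything in it hypothetical: `PickPlace`, `plan_actions`, and the `"tray"` drop-off name are illustrative only, and en passant (where the captured pawn is not on the target square) is deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PickPlace:
    source: str  # square to pick from, e.g. "e4"
    target: str  # square or off-board location to place on


# Hypothetical name for wherever captured pieces get dropped.
DROP_OFF = "tray"

# Rook relocation that goes with each castling king move (king source, king target).
CASTLING_ROOK_MOVES = {
    ("e1", "g1"): ("h1", "f1"),
    ("e1", "c1"): ("a1", "d1"),
    ("e8", "g8"): ("h8", "f8"),
    ("e8", "c8"): ("a8", "d8"),
}


def plan_actions(source: str, target: str, is_capture: bool = False,
                 is_castling: bool = False) -> list[PickPlace]:
    """Translate one chess move into the pick-and-place runs the arm has to perform."""
    actions = []
    if is_capture:
        # First clear the opponent's piece from the target square.
        actions.append(PickPlace(target, DROP_OFF))
    actions.append(PickPlace(source, target))
    if is_castling:
        rook_source, rook_target = CASTLING_ROOK_MOVES[(source, target)]
        actions.append(PickPlace(rook_source, rook_target))
    return actions


print(plan_actions("d5", "e4", is_capture=True))
print(plan_actions("e8", "g8", is_castling=True))
```

Each `PickPlace` would then get its own red and blue overlay, which also covers the two-arrow case for castling.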

Also, my piece detection model struggles most with queens and kings - likely because they appear less frequently and there’s less training data for them. I wonder if the same issue will arise in the action model, since some pieces are moved less often. Playing more endgames could help balance this out.

Getting to 80% accuracy might not be too hard, but hitting >95% - let alone 99% - will be tough. In the Kasparov game, there were 42 moves total, plus 12 captures. So 54 actions are needed. At 99% accuracy per action, the probability of a flawless game is only 58% (0.99^54).
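The compounding effect above is easy to explore for other accuracy levels. This sketch assumes every action succeeds or fails independently with the same probability, which is a simplification; the function names are hypothetical.

```python
def flawless_game_probability(per_action_success: float, n_actions: int) -> float:
    """Probability that all actions succeed, assuming independent attempts."""
    return per_action_success ** n_actions


def required_per_action_success(target_game_success: float, n_actions: int) -> float:
    """Per-action success rate needed to reach a target flawless-game rate."""
    return target_game_success ** (1 / n_actions)


n_actions = 42 + 12  # moves plus captures in the Kasparov game

for p in (0.80, 0.95, 0.99):
    game = flawless_game_probability(p, n_actions)
    print(f"{p:.0%} per action -> {game:.2%} flawless games")

needed = required_per_action_success(0.90, n_actions)
print(f"90% flawless games would need about {needed:.2%} per action")
```

Running the inverse direction shows how quickly the per-action requirement climbs once the target for whole games goes up.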

Damn. That’s going to be hard. But also exactly why I’m doing this: to reach the hard parts of robotics and develop a concrete understanding of what it really means to build capable robots.

Tomorrow’s focus: more data collection.

Writing these words just before midnight, I can’t help but smile - each week I spend working on robotics strengthens my conviction that this field will be revolutionary within my lifetime, and is something I want to go all in on in the next few years.

Modifying data recording script, collecting 100 episodes, first training run with ACT

Monday, 29th of June

Today was a really good day. I implemented a new version of the data collection script for the SO-100, where the user can click on an image from the context camera’s view to add a red circle indicating the piece to be moved, and a blue circle indicating the target square.

To test this method, I used a simple example: two rooks set up on the chessboard, each meant to be moved two squares forward. The challenge is that the model needs to infer which of the two should be moved. I collected 100 episodes—50 for each piece—which took up half the day. Below is a screenshot of the coloured overlay. You can view the full dataset here.

![]()

I then reviewed the episodes for quality, deleted the ‘bad’ ones using Ville’s Pikodata, and merged the datasets with Phospho. After that, I started training an ACT model. This last step took longer than expected because: a) uploading the merged dataset was slow, b) I had trouble starting the training run—turns out it requires an unexpectedly large amount of RAM (~60 GB!) despite a batch size of 8 and each episode lasting only 15 seconds, and c) I ran into issues with Wandb tracking. There was no clear error message—just a timeout during initialisation. It turned out the issue was caused by trying to assign a run name that conflicted with a previously deleted run. This didn’t cause a proper failure, just a silent timeout.

Working through the LeRobot code

Friday, 28th of June

I spent the morning reading the first five blog posts of Benjie Holson’s General Robots Substack. He was laid off from Google Robotics during the recent restructurings and now shares his thoughts on building robots. I enjoyed it a lot and strongly recommend it.

I also read this blog post by Dyna Robotics. Unlike Physical Intelligence, which works on general-purpose robotic arms as a pure research company, Dyna focuses on making single use cases work. This approach makes much more sense to me - it’s clear that we’ll need significantly more data than we currently have for general-purpose robotics, and it’s still unclear which data modalities will get us there. World models, simulation, or egocentric video don’t yet seem good enough to close that gap anytime soon.

By prioritising working use cases over pure research, Dyna can generate revenue early while positioning itself to scale once better data modalities and model architectures emerge.

What’s remarkable about Dyna’s robot is its long-duration performance: it ran 24 hours straight, folding over 850 napkins at ~60% of human speed with a 99.4% success rate - zero interventions. This is exceptional. The blog post explains, at a high level, how they achieved this: they train a model to estimate how well the robot is progressing toward completing a task. As I understand it, they then take the successful episodes and use them as training data for further iterations, allowing the model to learn from its own experience. This builds on research by Jason Ma (CTO of Dyna) at DeepMind, detailed in this paper.

![]()

In the afternoon, I revisited the LeRobot code. My plan is to train an ACT-based method to move objects (highlighted with a red circle) to a target location (highlighted with a blue circle). To do this, I need to record data with camera input augmented by these visual cues.

The code for dataset recording and policy execution is in the record.py file of LeRobot, which contains a robot.get_observation() method. My plan is to:

- Create a copy of record.py.

- Build an interface that lets the user click on the image to place the red and blue circles before recording starts.

- Integrate this into the high-level control loop of my chess application so that the visual cues are generated automatically during data collection.
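The overlay step in the plan above can be sketched independently of LeRobot. This is an illustration under assumptions, not the actual recording script: it assumes the context camera frame is an RGB NumPy array of shape height x width x 3, takes the clicked pixel positions as plain arguments (in a real tool they would come from a GUI mouse callback), and uses hypothetical names throughout.

```python
import numpy as np

RED = (255, 0, 0)   # RGB, marks the piece to be moved
BLUE = (0, 0, 255)  # RGB, marks the target square


def draw_ring(image: np.ndarray, centre_xy: tuple[int, int], radius: int,
              colour: tuple[int, int, int], thickness: int = 3) -> None:
    """Draw a circle outline in place on an H x W x 3 image."""
    height, width = image.shape[:2]
    ys, xs = np.ogrid[:height, :width]
    cx, cy = centre_xy
    distance = np.sqrt((xs - cx) ** 2 + (ys - cy) ** 2)
    ring = np.abs(distance - radius) <= thickness / 2
    image[ring] = colour


def add_visual_cues(frame: np.ndarray, piece_xy: tuple[int, int],
                    target_xy: tuple[int, int], radius: int = 20) -> np.ndarray:
    """Return a copy of the frame with the red (piece) and blue (target) circles."""
    annotated = frame.copy()
    draw_ring(annotated, piece_xy, radius, RED)
    draw_ring(annotated, target_xy, radius, BLUE)
    return annotated


# Stand-in for a camera frame; positions are (x, y) pixel coordinates of the clicks.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
annotated = add_visual_cues(frame, piece_xy=(200, 300), target_xy=(200, 180))
print(annotated.shape, annotated.dtype)
```

In the copied recording script, a function like `add_visual_cues` would be applied to the context camera image from each observation before it is stored, so the policy sees the same cues during training and execution.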

Research on robotic arms & Hugging Face hackathon winner demos

Thursday, 27th of June

I spent the day researching robotic arms, mainly from Trossen and Arx. What stood out was how prominently Trossen advertises its integration with LeRobot on their website. It made me wonder whether LeRobot might become the standard interface layer in robotics over the next few years.

I also came across this video by Trossen showcasing their LeRobot integration. I found it especially helpful because I’ve been curious about how big the leap is from working with the SO-100 to using a Trossen arm. The video gave me the impression that much of what I’m learning with the SO-100 will transfer directly.

Most of the afternoon went into reading through the LeRobot codebase.

Later, I watched the demo videos of the top 30 winning teams from the LeRobot hackathon. As someone new to robotics, it was inspiring to see the range of ideas, how much can be built in just a weekend, and the general research directions people are exploring. Highly recommend checking them out.

In the evening, I had dinner with Jannik Grothusen, solo founder of the Robot Learning Company. It was interesting to hear his perspective after several years in the field. While he strongly believes in robot learning, he also thinks that the current hardware is overpriced and suboptimal for it, which is why he's planning to build a bimanual robot learning dev kit that is cheaper than what you can buy from Trossen or Arx.

Webcam Integration and the Long Tail of Labelling

Wednesday, 26th of June

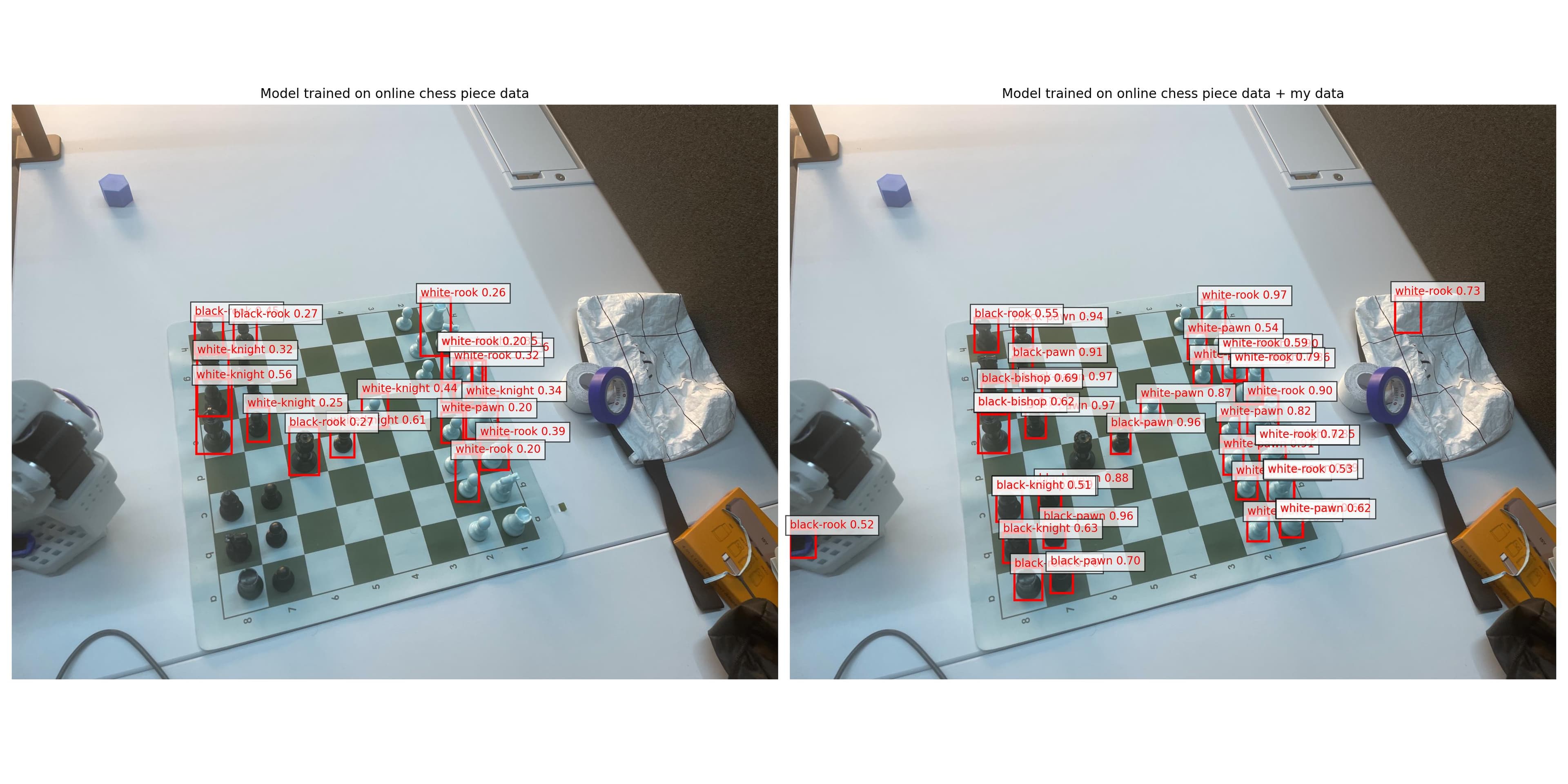

Today was a really productive day. I added a camera module that reads from a connected webcam and integrated it with a new script that streams the chessboard and displays the detected board in real time. While running the script, I noticed that the main bottleneck in the chess robot pipeline is still board segmentation and piece detection.

To make data collection easier, I added a script that streams the webcam feed to a desktop window and allows the user to save every n-th frame. This makes it possible to move pieces around and automatically capture images, instead of manually taking photos with a phone. It’s also the first time I’ve added webcam-based data that will actually be used in the final application.

I labelled around 50 additional images for both segmentation and piece detection, and retrained the models. However, I’m still not satisfied with the results - it looks like I’ll need significantly more labelled data.

I also spent some time exploring the LeRobot codebase, read through the example scripts, and calibrated my arms. I found the simulation script particularly interesting, as I haven’t worked with robotic simulations before, even though I know it’s a major part of the field.

Over the next few days, I want to focus more on the robot code - reading more of the LeRobot internals during the day - and continuing to label data for the pieces in the evenings.

Adding chess board orientation, next move prediction and varying computer strength

Tuesday, 25th of June

One major missing piece was enabling the computer to determine the correct orientation of the chessboard. Without this, the FEN - and therefore the next move prediction - would be incorrect. I considered ways to detect the orientation automatically, but since it’s impossible to infer it reliably at all stages of the game, especially in the endgame, I decided the user will need to set it manually.
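To illustrate why the orientation matters, here is a small sketch that turns a detected 8x8 grid into the piece-placement part of a FEN string. It is not the code from the repo: `grid_to_fen_placement` and the `white_at_bottom` flag are hypothetical, and only the two orientations where the camera looks along the files are handled (a side-on view would need 90° rotations as well).

```python
def rotate_180(grid):
    """Rotate an 8x8 grid by 180 degrees."""
    return [row[::-1] for row in grid[::-1]]


def grid_to_fen_placement(grid, white_at_bottom=True):
    """Build the FEN piece-placement field from a grid as seen by the camera.

    `grid` has 8 rows (top row of the image first) of 8 entries, each a FEN
    piece letter such as "K" or "p", or None for an empty square.
    """
    if not white_at_bottom:
        grid = rotate_180(grid)
    fen_rows = []
    for row in grid:
        fen_row, empty = "", 0
        for piece in row:
            if piece is None:
                empty += 1
                continue
            if empty:
                fen_row += str(empty)
                empty = 0
            fen_row += piece
        if empty:
            fen_row += str(empty)
        fen_rows.append(fen_row)
    return "/".join(fen_rows)


start = (
    [list("rnbqkbnr"), list("pppppppp")]
    + [[None] * 8 for _ in range(4)]
    + [list("PPPPPPPP"), list("RNBQKBNR")]
)
seen_from_black_side = rotate_180(start)

print(grid_to_fen_placement(start))
print(grid_to_fen_placement(seen_from_black_side, white_at_bottom=False))
print(grid_to_fen_placement(seen_from_black_side))  # wrong flag -> wrong position
```

The last call shows the failure case: with the wrong orientation the string is still valid FEN syntax, but it describes a different position, so the engine would suggest moves for the wrong board.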

I also integrated Stockfish to predict the next move and made the engine strength configurable, so you can practise against the robot at different difficulty levels. Ideally, it could also provide verbal feedback on your moves - but I’ll leave that as an optional feature for later.

Finally, I tidied up the readme of the repo.

![]()

Reshuffling chess analysis code

Monday, 24th of June

The chessboard analyser (the computer vision part) is working well overall, but I ran into a few issues, most notably, pieces were sometimes being assigned to the wrong squares, despite being correctly detected with bounding boxes.

Last week, I relied heavily on Cursor’s agent mode, which eroded my intuition for the overall code structure. As a result, it became hard to reason about what was missing or going wrong. To address this, I spent today introducing a clean dataclass structure that represents the full state of the chessboard, including intermediate results. Implementing this required changes across the codebase, which helped rebuild my understanding and led to partial rewrites.

I’m still unsure how much of a speed-up agent mode actually provided. My current takeaway is to only use it for making targeted changes, and to avoid delegating structural decisions to it.

Previously, piece-to-square assignment was based on whether the centre of a bounding box fell within a square. However, this approach proved unreliable, especially for taller pieces like the queen and king, because the camera isn’t positioned directly overhead. In those cases, the centre point often landed in the wrong square. I’ve now changed the method to use a point located at one-quarter of the bounding box height, which seems to give more accurate results.
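The change described above can be sketched as follows. Two assumptions that are mine, not from the original code: the quarter of the box height is measured from the bottom edge (where the base of the piece sits), and the board image has already been rectified into an axis-aligned grid with a8 at the top-left. All names are hypothetical.

```python
def anchor_point(box, fraction_from_bottom=0.25):
    """Reference point of a bounding box (x_min, y_min, x_max, y_max), y growing downwards."""
    x_min, y_min, x_max, y_max = box
    x = (x_min + x_max) / 2
    y = y_max - fraction_from_bottom * (y_max - y_min)
    return x, y


def square_for_point(point, board_origin, square_size):
    """Map an image point to a square name, or None if it lies outside the board."""
    x, y = point
    col = int((x - board_origin[0]) // square_size)
    row = int((y - board_origin[1]) // square_size)
    if not (0 <= col < 8 and 0 <= row < 8):
        return None
    return "abcdefgh"[col] + str(8 - row)


# A tall queen standing on d1: seen at an angle, its box reaches into the d2 square.
queen_box = (310, 560, 390, 790)
origin, size = (0, 0), 100

centre = anchor_point(queen_box, fraction_from_bottom=0.5)
quarter = anchor_point(queen_box, fraction_from_bottom=0.25)
print("box centre     ->", square_for_point(centre, origin, size))
print("quarter height ->", square_for_point(quarter, origin, size))
```

In this made-up example the box centre lands on d2 while the lower reference point stays on d1, which mirrors the failure described for queens and kings.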

Here's the current status quo:

![]()

YC AI Start-up School week

Monday 16th of June to Sunday 22nd of June.

I attended Y Combinator’s Startup School. The two-day conference had an impressive line-up of speakers, but most of the talks didn’t offer much beyond what the speakers had already shared in previous appearances.

What stood out most to me was Chelsea Finn’s recap of Physical Intelligence’s work. While it was essentially a condensed walkthrough of what’s already available on their blog, it was still valuable to hear her personal framing and emphasis. It was also interesting to pick up on some of her views - like her current lack of enthusiasm for data sources beyond teleoperation.

![]()

Another good talk was Andrej Karpathy's meta-talk on LLMs and interacting with them. Karpathy introduces a lot of analogies and mental models for how to think about LLMs and designing software around them.

The highlight of the conference, though, was meeting other attendees - especially fellow Europeans. Being there acted as a strong filter for both technical depth and startup interest, a combination that’s sometimes hard to find at ETH. I spent the rest of the week reconnecting with people one-on-one, hosting an ETH alumni event, meeting a friend at Founders Fund, and organising a dinner party for Germans in the Bay Area.

Midweek, I found myself frustrated about not getting much actual work done. But after jotting down some thoughts in my notebook, I realised that this - meeting thoughtful, like-minded people - was exactly what I’d been missing in the months before. Many of these early conversations felt like planting seeds for long-term relationships.

Segmentation model working

Friday, 13th of June

Spent more time setting up segmentation model training and inference, tidying up code and adding a class and CLI script to run inference on images easily. I will also use this class later in the main control loop for the chess robot to repeatedly run inference and store the state of the entire application. Here is a look at what the model is able to infer:

![]()

Working on LeRobot code because of GCP outage

Thursday, 12th of June

I worked on copying the segmentation model that I trained on Roboflow yesterday. GCP had an outage that affected Roboflow, so I couldn’t download my data.

Instead I spent time working through the LeRobot code and started taking notes in Notion to retain more knowledge and prevent going back and forth through the code in the future.

I structured my notes in Notion again and thought that I should keep my daily notes blog up to date. It might be very useful in a few weeks to review my thoughts and thought process. It’s also a treasure trove of links and resources. It could also be useful as a huge context of thoughts that I can feed into an LLM and discuss ideas.

I read Rerun’s blogpost on their 17m Series A. They’re ~50 team members, based in Stockholm and as I understand it pre-revenue. What I found interesting about them is the idea that they picked the most “boring” but critical part of building robotics (data management) and are building a solution to it. It’s a bit like the common start-up advice to build for a boring industry, but applied to the boring part of a hyped technology.

Moving from Edge Detection to Segmentation

Monday-Wednesday 9th-11th of June

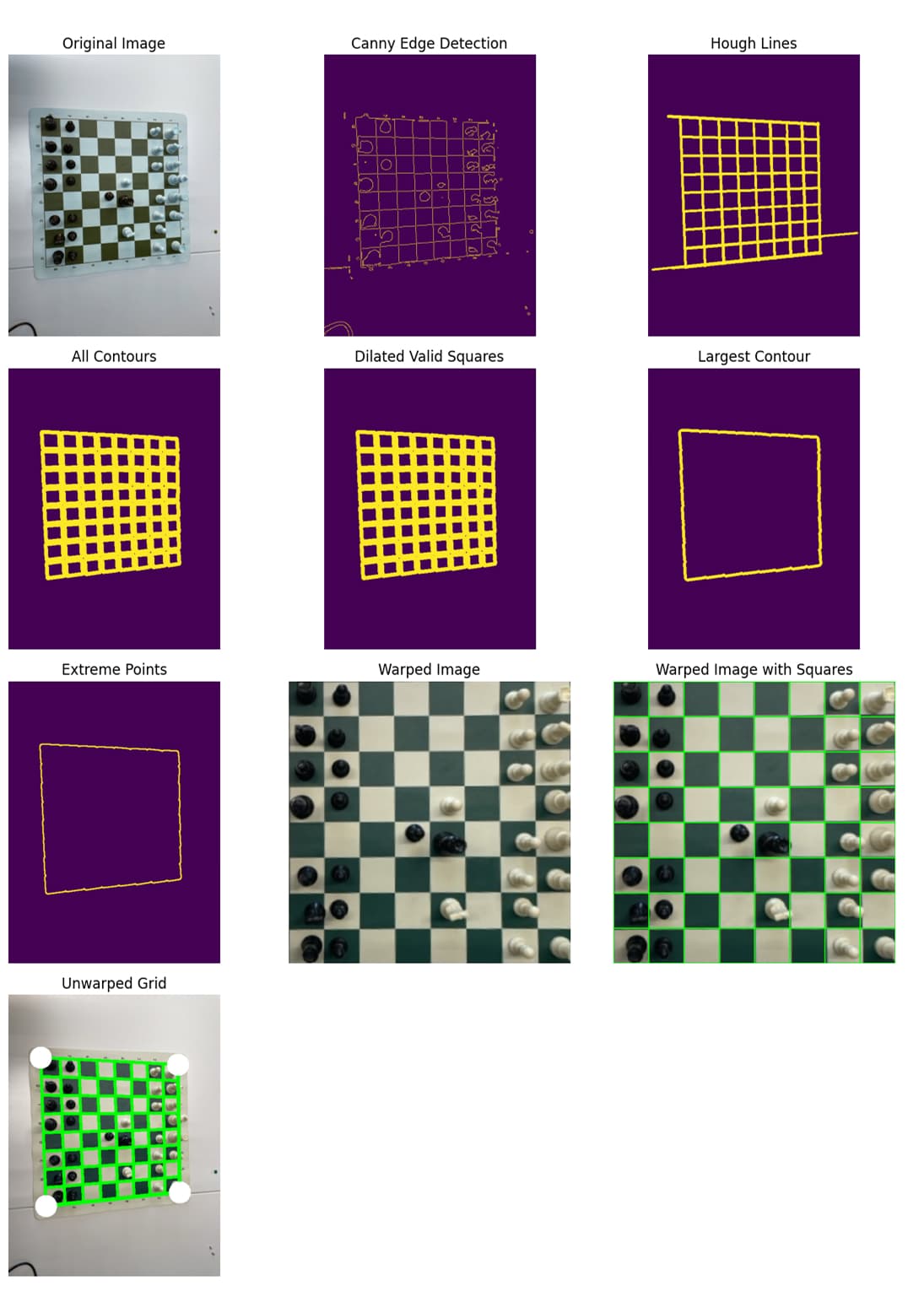

I encountered issues with board detection using the traditional computer vision method (no ML). The edge detection didn’t generalise well across images and especially struggled with (a) varying distances of the chessboard from the camera, and (b) pieces hiding the edges of the board. Below is an example where it works well and a second example showing how a slight change of angle & figures can mess things up. Note how slight deviations in the corner detection can lead to a misaligned grid and hence a very bad detection of the pieces per square.

![]()

![]()

So instead, I tried detecting the contours of the board using a YOLO model that detects polygons (not bounding boxes). That didn’t work very well. Next, I explored detecting bounding boxes of the chessboard and then applying the Hough Lines method (traditional CV), but that approach became messy. At this point, I started wondering whether segmentation might work better with my small dataset.

I spent a night labelling data for both bounding box detection and segmentation, and trained Roboflow models for both. I trust that they are running all possible optimisations in the background, so paying them $60 for this felt like a good use of money to save time.

The results showed that segmentation actually worked quite well, so I wrote code to train a model locally in order to (a) make my code fully open and usable by others, and (b) enable easy retraining of the model in the future without needing to pay for a Roboflow subscription. One thing I noticed during Roboflow’s training process was that they used checkpoints pretrained on large datasets. I incorporated this into my own training code as well.

Going to bed, I questioned whether spending the entire last week on the diffusion course was the best use of time. It’s hard to say. On the one hand, doing this seems to go against the usual advice of staying super focused as an entrepreneur. On the other hand, I think it’s also important to understand things deeply - especially if robotic ML models will be at the heart of the company - and my opportunity cost of doing this now may never be as low again.

Finished the Diffusion course

The week of 02.06. to 07.06.

I spent the week working through the course. This took longer than expected, but it was very useful in developing an intuition of flow matching and diffusion models.

I also found interesting statistics on robots in Europe: https://www.bruegel.org/blog-post/growing-presence-robots-eu-industries

Meta released its V-JEPA 2 model and paper. V-JEPA 2 is a video-based world model that predicts in a learned representation space rather than generating pixels, and is therefore relevant to robotics.

Diffusion course lecture 2

Saturday, 31st of May

Worked through lecture 2 of the diffusion course. Putting lecture notes into ChatGPT is a game-changer for the speed of learning. I wish I had had this during my master’s.

Problem set 1 for the diffusion course

Friday, 30th of May

- I started working on the first problem set of the Introduction to Flow Matching and Diffusion Models course. I initially expected the course to take about 3 days, but it's looking more like 5–6. Still, I think it's a worthwhile investment — building a solid understanding of diffusion models is important for where things are heading.

- I had lunch at the European Founders Embassy, then visited Founders Inc at Fort Mason, where I met two robotics founders, Ali Agha and Pierre-Louis Soulie. It’s an interesting space — an old military barracks — with a high density of robotics teams.

- I also ran into the team behind this robotics demo — a mini factory in a glass box that automates electronics assembly. They had been demoing it the day before, and it was great to connect in person.

Detour start: 6-lecture course on Diffusion and Flow models.

Thursday, 29th of May

- The next subsection in the AI for Robotics book covered diffusion models. While I understand the basic idea behind them, I still don’t grasp them at the fundamental level I’d like to. This has bothered me occasionally — but consistently — for the past two years. Diffusion models keep appearing in more domains: first images, then video, then proteins, and now I’m seeing how leading robotics models are increasingly based on diffusion as well.

- Two months ago, I came across a course titled Introduction to Flow Matching and Diffusion Models by Peter Holderrieth and bookmarked it. Now, during my first week in San Francisco, I’m in a good headspace and not feeling rushed. I’ve decided it’s a great time to finally work through the course.

- This is admittedly a detour from a detour — reading the AI for Robotics book instead of making faster progress on the chess robot — but I’m confident that a deep understanding of diffusion models will pay off significantly over the next few years. It’s the right kind of decision.

- I also came across this demo on X, showing a robot automating repetitive manual tasks, starting with electronics assembly. It’s an interesting concept. However, an even more compelling approach might be to begin with teleoperation, especially by workers in emerging-market countries. This would eliminate the need for model training at the start and take advantage of wage arbitrage. Over time, these teleoperated sessions could generate large-scale interaction data, which could be used to train task-specific models. As performance improves, less teleoperation would be needed due to emerging generalisation capabilities. Eventually, this data could support training a foundation model capable of zero-shot generalisation across a wide range of manual tasks.

More progress on AI for Robotics

Wednesday, 28th of May

I continued reading the AI for Robotics book, covering pages 120-220. The chapter after point clouds focused on robotic foundation models. I found the following particularly interesting:

- I was already familiar with the Kaplan et al. scaling laws paper, but this section introduced me to the Chinchilla paper and its replication studies, which refine our understanding of compute-efficient scaling. They show how to optimally allocate data and model size for a given compute budget.

- The paper Scaling Data-Constrained Language Models had a few striking takeaways:

  - Training on 4 epochs of a static dataset performs about as well as training on newly collected data.

  - Training beyond 40 epochs yields negligible gains.

![]()

- Sorscher et al. showed that typical scaling laws (training loss vs dataset size) can be broken through curriculum-style pruning:

  - In data-rich settings, pruning easy examples improves performance.

  - In data-constrained settings, pruning hard examples is more beneficial.

  - This challenges the idea that "more data is always better" and emphasises the importance of data difficulty and curation strategy.
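The Chinchilla bullet above can be made concrete with two commonly quoted rules of thumb, neither of which comes from the book notes: training compute is roughly C ≈ 6·N·D FLOPs for N parameters and D tokens, and the compute-optimal ratio is roughly 20 tokens per parameter. Both are approximations, and `compute_optimal_allocation` is a hypothetical helper.

```python
import math


def compute_optimal_allocation(compute_flops: float, tokens_per_param: float = 20.0):
    """Split a compute budget into model size and dataset size.

    Uses C ~ 6 * N * D together with D ~ tokens_per_param * N,
    which gives N = sqrt(C / (6 * tokens_per_param)).
    """
    params = math.sqrt(compute_flops / (6 * tokens_per_param))
    tokens = tokens_per_param * params
    return params, tokens


# Budget chosen to land on a 70B-parameter model as a sanity check.
params, tokens = compute_optimal_allocation(5.88e23)
print(f"{params:.2e} parameters, {tokens:.2e} tokens")
```

Doubling the compute budget under these assumptions grows both model size and dataset size by a factor of about 1.4, rather than putting everything into a bigger model.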

These two robotics papers sounded interesting:

- SayCan: A two-stage framework:

  - An LLM scores how useful each low-level skill is as the next step towards the instruction.

  - A learned value function (affordance) predicts the success of each individual skill primitive given the robot’s state and environment.

  - The overall execution is brittle - failure in any one skill causes the entire task to fail.

- Inner Monologue: Builds on SayCan by adding feedback and retries. The LLM reflects on whether a subtask was successful and can reattempt it, allowing for a more robust closed-loop system.
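The difference between the two papers can be sketched in a few lines, assuming each candidate skill comes with a language-model usefulness score and a success estimate for the current state. All names and numbers below are made up for illustration, and the retry loop is a strong simplification of Inner Monologue, which feeds textual feedback back into the LLM rather than just retrying.

```python
def choose_skill(usefulness: dict[str, float], success_estimate: dict[str, float]) -> str:
    """SayCan-style selection: pick the skill with the highest combined score."""
    return max(usefulness, key=lambda skill: usefulness[skill] * success_estimate.get(skill, 0.0))


def run_with_retries(skill: str, execute, max_attempts: int = 3):
    """Closed-loop execution: retry a skill until it reports success.

    Returns the number of attempts used, or None if every attempt failed.
    """
    for attempt in range(1, max_attempts + 1):
        if execute(skill):
            return attempt
    return None


usefulness = {"pick up sponge": 0.6, "go to table": 0.3, "pick up apple": 0.1}
success_estimate = {"pick up sponge": 0.1, "go to table": 0.9, "pick up apple": 0.8}

skill = choose_skill(usefulness, success_estimate)
print("next skill:", skill)

# Stand-in for the robot: the first attempt fails, the second succeeds.
outcomes = iter([False, True])
attempts = run_with_retries(skill, lambda s: next(outcomes))
print("attempts needed:", attempts)
```

Without the retry loop, the first failed attempt would end the whole task, which is the brittleness noted for SayCan.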

I noted these papers down to maybe have a look at later:

- NLMap SayCan

- Language to Rewards for Robotic Skill Synthesis (L2R)

- Code as Policies

- How to Prompt Your Robot

- End-to-End Training of Deep Visuomotor Policies

- Open X-Embodiment: Robotic learning datasets and RT-X models

- Cross-Former: Scaling Cross-Embodied Learning across Manipulation, Navigation, Locomotion, and Aviation

- Physically Grounded Vision-Language Models for Robotic Manipulation

Random Idea I had: I’m curious whether you could bootstrap robotic learning by starting with a basic pre-trained skill (e.g. "pick up a ball and place it in a basket"), then use an LLM to generate small task variations (e.g. place it on a shelf instead), and evaluate whether the outcome was successful. This could provide self-supervised training signals and gradually expand the robot’s skill set without manual labeling.

A bit sick and learning about point clouds

Tuesday, 27th of May

- I tried continuing to read the book AI for Robotics but couldn't concentrate because of my headache and light fever. The next chapter would have been about LiDAR and methods for analysing point clouds, so I watched this talk instead. It was mostly interesting to learn about the typical problems related to point clouds, namely, how to identify objects via segmentation, object classification, surface detection, and generating point clouds (e.g. 3D avatars) from a prompt.

- I talked to an investor whom I was introduced to and who helps connect cofounders. I also met Julian (founder of codarobotics.ai), whom I met via X. He is trying to build a world model and sell it to robotics companies for evaluations.

Flying to San Francisco & Started Reading "AI for Robotics"

Monday, 26th of May

- I'm moving to San Francisco for two months where I'll continue working and learning about robotics together with five other friends in a hacker house.

- I read the first 120 pages of AI for Robotics on the plane. The book is less a full guide to building robotic foundation models and more a medium-depth overview of the subfields feeding into robotics and the most important research in each of them.

Better chess piece detection & code cleaning

Thursday, 22nd of May

- I had 50 more images labelled, and the new model works much better. Not perfect yet, but I can now use this data to annotate new data even faster.

- I cleaned up the code for downloading and cleaning the data to make it reproducible and also to make it easier to wrangle the code if I lose files.

Board detection

Wednesday, 21st of May

- My labeller finished annotating the first 60 images of data. It’s not 100% perfect yet, but it looks promising and is good enough to wrap a first version of the model into a workflow. Ishrat, my labeller, will continue helping me annotate more data. I paid €50 to get 60 images annotated, and it’s definitely worth the time it’s saving me. Someone on X suggested using SAM to do the labelling automatically. I’m curious whether that would work, but for now, manual labelling is working well, and I don’t want to lose too much time experimenting. I also found more datasets of chess pieces on Roboflow. If I want the model to generalise across different board types, merging these datasets to train a unified model could be interesting.

- Detecting the chessboard: I started working on detecting the individual tiles so I can build a FEN/PGN representation of the game. This blog post was very interesting. After rewriting parts of the code, I’m starting to get some good results!

Chess piece detection

Tuesday, 20th of May

- I'm flying to SF on Monday. I'm not sure whether collecting data here makes sense if I cannot use it in SF initially. So the obvious part to work on this week is 1) reading out the chess board and 2) feeding it into a chess engine to get the next move. I'm going to train a simple YOLO model to detect the pieces. I looked into existing datasets and found this one on Kaggle. I trained a YOLO model on it, but it doesn't transfer well to my data. I need to train on more of my own images; playing 1-2 games and taking pictures of every other position from different angles might be enough. I'll use a freelancer from Upwork I worked with before to do the labelling. I looked at labelling platforms and decided to go with Roboflow (which is more of a model training company) to annotate the data, as their interface is fast and downloading the images with labels is easy too. Below is a picture of the predictions of a model trained on a different dataset applied to one of my pictures.

- I met Simon in person at the office today - we originally connected a few weeks ago through my posts on X. He’s visiting Berlin and wanted to see the SO-100 robot I built. His family runs a precision steel casting business, which is exactly the kind of company - a European manufacturing SME - that could be my future customer. Talking to him was incredibly valuable: we discussed how companies like his approach their processes, how much robotics they already use, where they struggle, and what they’re looking for. Simon is a founder himself and is considering building his next company in robotics. He’s one of those rare people who deeply understands both manufacturing and startups, which made the conversation especially insightful. He was also very kind and invited me to visit their factory once I’m back from SF - and even offered to introduce me to other companies in the region. I have no connections to SMEs and so I’m especially happy to have met Simon!

Back in Berlin & work on Substack

Monday, 19th of May

- I spent most of the day working on my Substack post about what I’m aiming to achieve during my one-month robotics sprint. Writing it all out in one continuous piece is an interesting challenge. It’s forcing me to connect ideas I’ve considered individually but never really thought about how they connect.

- In the afternoon, I talked to a friend who’s trying to build rockets - actual rockets, trying to compete with SpaceX and Isar Aerospace. Then in the evening, I met two other friends, both with robotics backgrounds, but now working on AI agents. The first conversation left me way more optimistic about my own ideas than the second. The rocket friend made the impossible feel exciting. The robotics guys were encouraging but repeated how hard robotics is. They're not wrong. But something about the way they said it made the whole thing feel… heavy. I’ve noticed this before. Some conversations give you energy. Others consume it - not maliciously, but by being realistic in a way that starts to feel like inertia. Are those energy-giving conversations just sugar highs? Maybe. Doubt might be more accurate, but it’s rarely more useful. Especially when you’re still in the phase where the only way to find out is to keep going.

London

Thursday, 15th of May

- I'm trying to understand whether I want to move to Paris or London after the next two months in SF. A friend is celebrating her birthday and that was a good occasion to fly here.

- I met two VCs whom I had met before. One is an older friend working at a Series A and later-stage VC whom I wanted to see again; the other is an early-stage VC whom I had met only once before and want to get to know a bit before I'm in a position where I'm fundraising.

- Talk: Connecting Robotics and Foundation Models by Google robotics. Discusses several of their recent papers.

- They're taking a very different approach from Physical Intelligence and are doing more work where the robot plans its tasks explicitly.

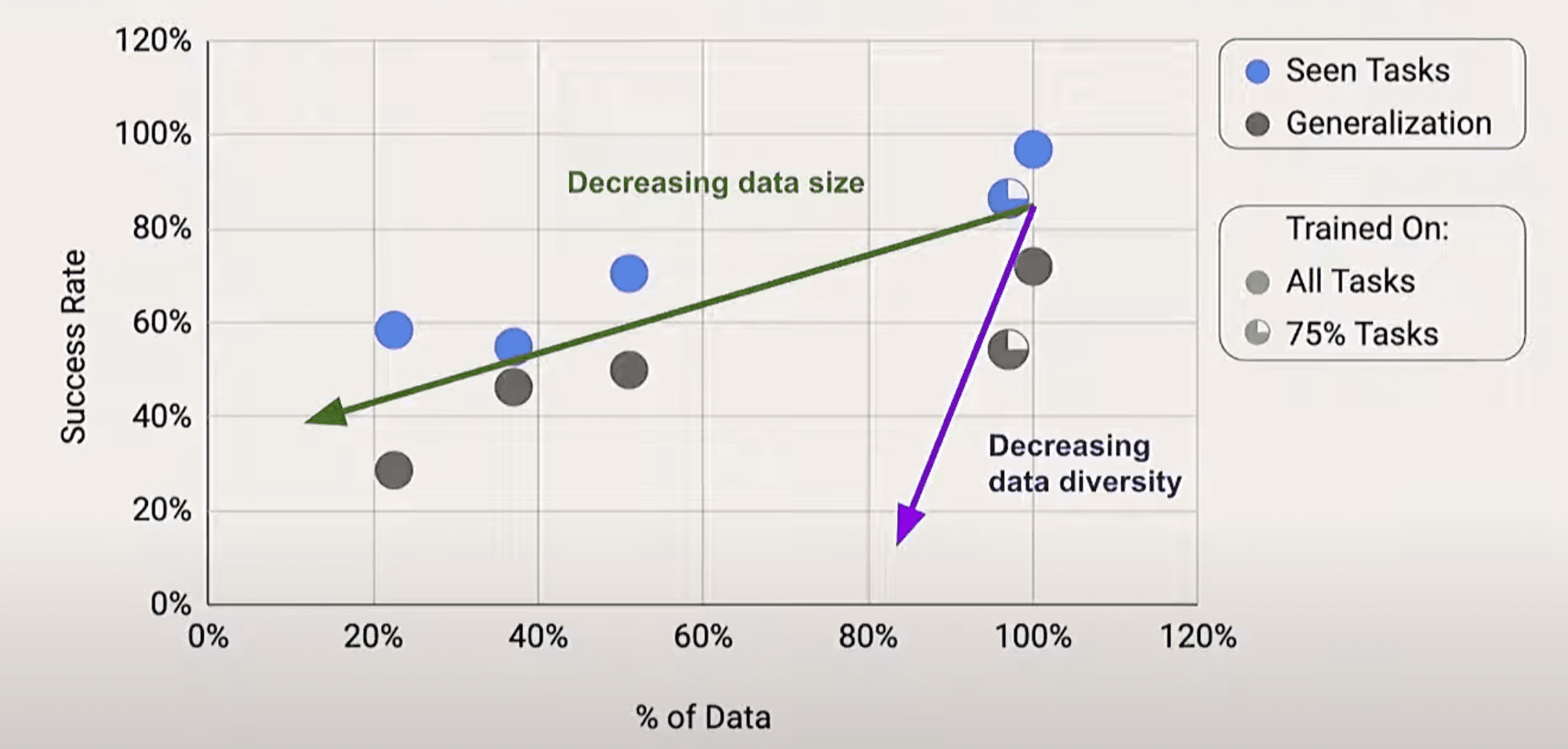

- Diversity of data once more trumps quantity: A model trained on 75% of the available tasks performs approximately as well as a model trained on 50% of the data.

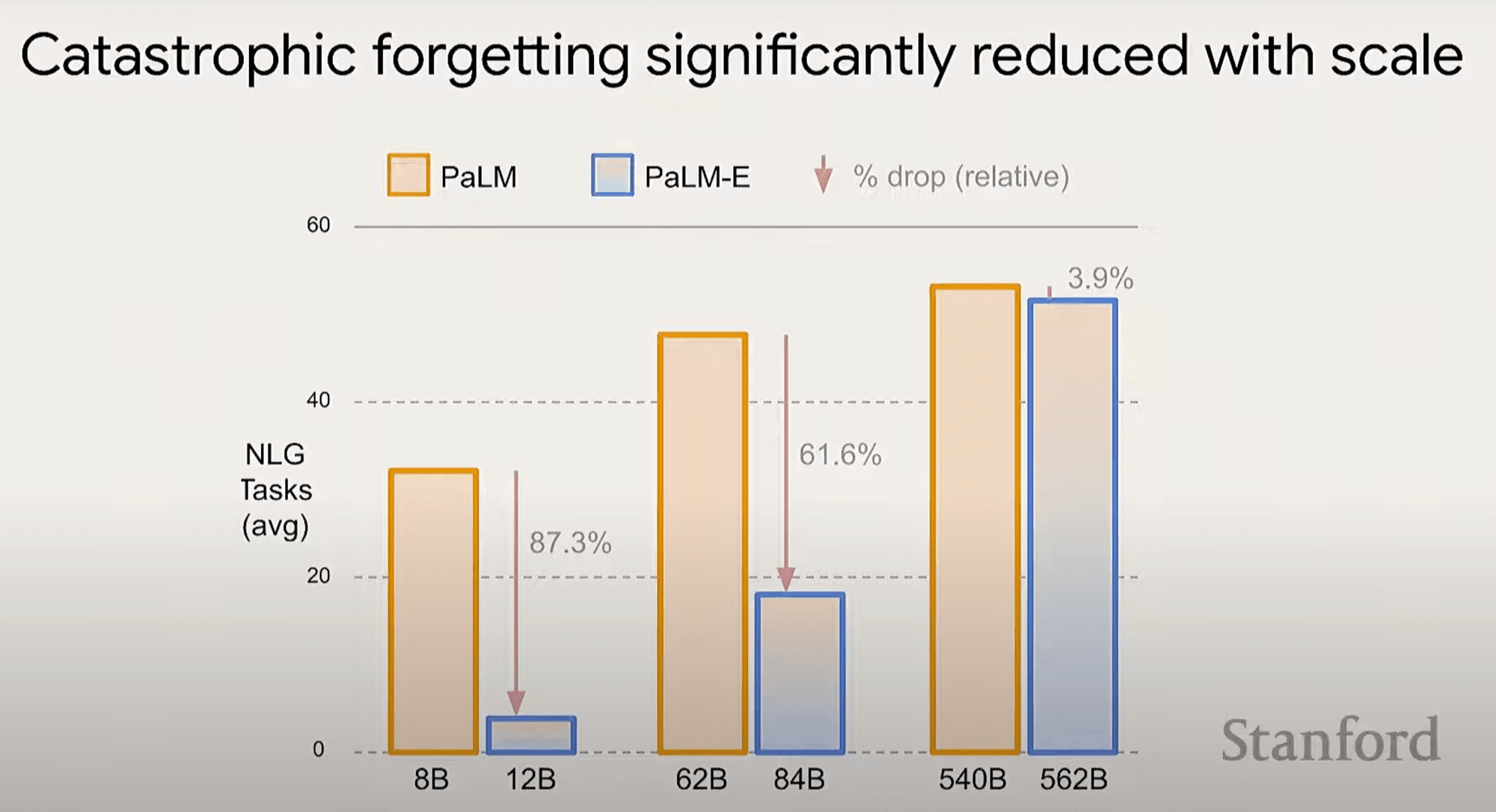

- Larger models are less likely to exhibit catastrophic forgetting when trained on new data.

- Talk: Bernt Børnich from 1x Robotics. He mentions the importance of data. Connecting this with the last talk and the importance of data diversity, I wonder whether household robotics with humanoids is so interesting to many companies because of the data diversity you could collect there.

Symposium Münster

Tuesday & Wednesday, 13th & 14th of May

- I was invited to speak for 45 minutes about recent developments in AI and join a panel. I enjoyed giving a speech with a call to action to start building. You can find the slides here if you're interested. I had agreed to speak a long time ago. After considering how much time this took, I'm not sure if I'd do it again.

Monday, 12th of May - Day off

Zurich Robotics Hackathon

Friday to Sunday, 9th-11th of May

- We were a team of four and attached an SO-100 arm to a Unitree Go2 Quadruped with the ultimate goal of having it retrieve objects in the room. We trained an ACT policy on picking up a tin can and a roll of tape (~60 episodes per item). This worked with ~60% accuracy. We used OpenAI to perceive the environment, interact with the user via voice, and trigger the ACT policy when it detected that it was close.

- You can find our demo here: https://x.com/gdb/status/1921963245071475107 Greg Brockman, president of OpenAI, retweeted it, which I thought was super cool!

- I had to dig much deeper into the LeRobot code and learnt a lot. I want to spend way more time reading and working with the lower-level code, not just the higher-level API, going forward. It was really cool playing around with the Unitree Go2 and the Unitree humanoid. Also, I again met some very cool people. Interestingly, while each project worked at some point, the demos of all six teams failed in the final presentations!

- Flying to Zurich on Friday, I watched the videos from Sequoia's AI Ascent. I particularly liked Jim Fan's talk on the future of robotics and Bret's talk on agents.

Moving from Phospho to LeRobot code & market research

Thursday, 8th of May

- Today, I wanted to understand the robotics market in terms of size and growth, and in particular how that compares to the AI market. I created a table of current market size, CAGR and geo split. I'm not sure how to think about market size estimations and how much they should guide my thinking.

- Previously, I've used Phospho, an interface, to use the SO-100. It saves you a lot of time, but also hides a lot of the complexity. It was great to get started, but I want to write my own code and make modifications myself. To this end I need to use the LeRobot code from HuggingFace. It's going to be a bit of work to dig into the code base, but I already sense it will be very useful for learning how to write code that interacts with a robot.

- I also recorded a new dataset with (1) a context camera and (2) red tape on the SO-100's claw to hopefully make things easier for the ML model. I used Phospho to train an ACT model, but it crashed. These feedback loops are taking too long. I want to train multiple models on my own GPUs concurrently and also need more control of the interface. Another reason to move to the LeRobot codebase.

- One shortcoming of the SO-100 IMO is its short reach (~40cm) and low lift (~300g). I've been keeping my eyes peeled for which robot would be the next hardware level with more capabilities. Reading through the LeRobot code, I found out about the Koch v1.1 (building on the original version by Alexander Koch). It costs approximately 668€. I thought this might be an upgrade to the SO-100, but the reach looks similar, and as for lift I might as well upgrade to the stronger servo motor for the SO-100. This is a cool demo though. The next level beyond the SO-100 is probably the Agilex PiPER (2,699€) as I understand it.

Wednesday, 7th of May - Day off

Pi0 paper, ACT model

Tuesday, 6th of May

- I found this project where someone built two robots playing chess against each other. He seems to be using digital markers on the video, together with an ACT model, to teach the robot where to lift the pieces. This is the second SO-100 chess project I'm seeing, in addition to this one.

- The ACT model I started training yesterday crashed. I restarted a new training run. Somehow this model also isn't working. Next step: Use the context camera and try training a model directly via LeRobot instead of Phospho. I'd like to be able to debug better by seeing the model's predictions and being able to verify that it has access to the camera. Phospho is cool, but features are a bit limited at the moment.

- I read the π0 paper from Physical Intelligence. The most interesting things I learnt were:

- To train robotic foundation models, you actually want to pre-train on very diverse and 'messy' data, including mistakes of the robot and how it recovered from them. You need this to teach the robot how to recover from small issues. You then want to fine-tune on fast, clean execution data to teach the model how it should execute in an ideal case.

- LLMs are enabled by internet-scale data to train them on. Nothing comparable exists for robotics. Data collection is a huge moat for robotics companies. I wonder if a platform brokering training data among robotics companies would be a viable business? Physical Intelligence used 10,000 hours of pre-training data from 7 robot configurations and 68 tasks. That's roughly 3.4 years of data collection if you assume 8h per day, every day of the year. Further reinforcing the importance of data is that they actively ask for companies that can collect data to reach out to them.

- Their key results are (a) pretraining on diverse data improves performance in all but one eval case, (b) fine-tuning for too long on a task can actually lead to performance degradation, (c) the model does not have any 'emergent' properties yet in the sense that it has 0% success on tasks that it hasn't seen yet, and (d) by breaking a task into smaller subtask prompts the model achieves better overall success rates.

- Idea: Virtually every user working with the SO-100 uploads their data to Huggingface, where it's publicly accessible. It would be interesting to copy the approach of the π0 paper and extend a VLM to train on this data and evaluate whether this leads to faster learning of a task.
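As a quick sanity check on the 10,000-hour figure from the π0 notes above, here is a back-of-the-envelope calculation. The 8 hours per day comes from my note; recording every calendar day, the 250-working-day variant, and the number of parallel robots are my own simplifying assumptions, and the function name is made up for illustration.

```python
def years_of_collection(total_hours: float,
                        hours_per_day: float = 8.0,
                        days_per_year: float = 365.0,
                        robots: int = 1) -> float:
    """Years needed to record `total_hours` with `robots` stations running in parallel."""
    days = total_hours / (hours_per_day * robots)
    return days / days_per_year


# One robot, recording 8h on every calendar day.
print(round(years_of_collection(10_000), 2))
# One robot, recording only on ~250 working days per year (assumption).
print(round(years_of_collection(10_000, days_per_year=250), 2))
# Ten robots in parallel (assumption), every calendar day.
print(round(years_of_collection(10_000, robots=10), 2))
```

The takeaway for me is that the number only becomes manageable once many robots record in parallel.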

Aloha paper + new chess recording

Monday, 5th of May

- I read the ALOHA paper, which states that part of its contribution is the development of a low-cost arm for 20,000€. This struck me as extremely expensive. My SO-100 costs 200€ for a leader and a follower arm, and I was expecting the next quality level to be more like 2000-3000€. But the cheapest Universal Robot arm costs 23,500€ and robots by Franka are not that different. How are they so expensive while Unitree's humanoid costs 16,000$? It seems like many robotics start-ups are building their own arms to keep costs low, such as Tau Robotics or AQL Robotics. The cheapest arm I found was the Agilex Piper for 2500€.

- I decided that by the end of the month, I want to have written a robotics 101 guide summarising everything I learnt. I started the post, did research on some robotic arm producers and researched the founding year and total funding of some of the big robotics start-ups.

- The model I trained yesterday doesn't work either. I collected another dataset of 50 e2 -> e4 pawn moves as I moved office today, and I want to rule out that the cause of the error is the change in lighting. The new model still doesn't work. It goes through the motions, but doesn't open the claw or touch the chess pieces. After I asked on the Phospho Discord, someone suggested that (a) I might be moving too fast in the training recordings (check out a recording here) and (b) there might be an issue with the contrast of the gripper and the chess piece. This leads me to believe that I may have overestimated the ability of Gr00t and robotic foundation models as a whole. Next I will train an ACT policy to see whether that works better.

- Ordered a webcam as I hope a second video source will help the ML model learn, as well as grip tape and a mount for the camera on the table.

- Defined goals for this week:

- Learn how to train an ACT, Gr00t, and Pi0 model with the SO-100 arm and do so with a toy example.

- My understanding is that the three methods above are the three big archetypes of robotic learning. I want to read the three papers, understand the differences between them, and summarise this in my robotics 101 blog post.

- Gather ideas for what I want to build this weekend at the robotics hackathon in Zurich.

Questions I developed today

- Will I have to build my own robot to iterate at a low cost, and have a robot that is a bit stronger than the SO-100? Robots from companies like Universal Robots and Franka are really expensive!

- The Aloha paper uses four cameras, two of which are on the grippers and another two which are filming from the top and the side of the table. How important is the number of cameras for good training results?

First training with the SO-100

Sunday, 4th of May

- I trained a simple Gr00t model to move a pawn from E2 to E4 using 50 samples, but it's not really working yet as you can see here: https://x.com/DominiqueCAPaul/status/1919029034895167952

- I found out that I wasn't training for long enough and that that might be the issue. I started with 10 epochs, then extended to 25, but without success. Starting a training run with 50 epochs overnight now.

Friday:

- Found these tuning tips for ACT model: https://docs.google.com/document/d/1FVIZfoALXg_ZkYKaYVh-qOlaXveq5CtvJHXkY25eYhs/edit?tab=t.0#heading=h.2xiz3mdijyv4

- Realised that ACT and Diffusion policy might not be training for long enough

Thursday:

- Evaluated models that I started training yesterday -> They are shit

- Looked into diffusion model paper and kicked off a training. Dataloader very slow.

- Created issue on LeRobot for this (also for SmolVLA)

- Thought about working at a company like Physical Intelligence

- Talked with Elvis, caught up with a friend and went to a disappointing robotics mixer. Learning: Just have 1-on-1 conversations with people over call.

- Thought about working more on GVL, but not sure how it helps me with the chess robot

- I wonder what kind of loss the different models (SmolVLA, ACT and Diffusion) use. Can I compare the loss curves against the number of samples seen?
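On the last question above: one way to put runs on a common x-axis is to convert training steps into samples seen (steps × batch size). Below is a minimal sketch. The logged values are made-up placeholders, not results from my runs, and because the models may optimise different objectives I would only compare the shape of the curves (here scaled by each run's first logged loss), not absolute values.

```python
def samples_seen(steps: list[int], batch_size: int) -> list[int]:
    """Convert optimizer steps into the number of training samples processed."""
    return [s * batch_size for s in steps]


def relative_loss(losses: list[float]) -> list[float]:
    """Scale a loss curve by its first value so curves with different units are comparable."""
    first = losses[0]
    return [loss / first for loss in losses]


# Hypothetical training logs (placeholder numbers for illustration only).
runs = {
    "act": {"batch_size": 8, "steps": [1000, 2000, 4000], "loss": [0.8, 0.4, 0.2]},
    "smolvla": {"batch_size": 64, "steps": [250, 500], "loss": [2.0, 1.0]},
}

for name, log in runs.items():
    x = samples_seen(log["steps"], log["batch_size"])
    y = relative_loss(log["loss"])
    print(name, list(zip(x, y)))
```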

Replicating the Generative Value Learning Paper

Wednesday, 10th of July

I collected the last episodes this morning and then went through the data to make sure that there are no errors in the data that the model tries to learn from. I then merged the datasets into one using Phospho and am now waiting for the upload to Huggingface to complete.

In the meantime I read the paper "Vision Language Models are In-Context Value Learners", which the founder of Dyna wrote during his PhD and is building on at the company. The paper proposes a method called Generative Value Learning (GVL), which uses a frozen vision-language model (VLM) to assign progress scores (between 0 and 1) to video frames of a robot performing a task. Unlike naive prompting, which produces uninformative monotonic scores due to temporal biases, GVL shuffles video frames and treats value prediction as a temporal ordering task, forcing the VLM to reason more deeply about task semantics and progress.

These frame-wise scores can then be used for:

- success detection (e.g. to filter out failed trajectories),

- dataset quality estimation,

- and advantage-weighted imitation learning — enabling robots to autonomously learn from their own experience, without requiring external reward labels or human supervision.
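The shuffle-then-score idea and the success filter described above can be sketched roughly as follows. This is my own minimal illustration, not the paper's implementation: `score_fn` stands in for a call to a VLM, `fake_vlm` is a toy stub so the example runs offline, and the 0.9 success threshold is an arbitrary assumption. Prompt design and in-context examples are left out entirely.

```python
import random
from typing import Callable, Sequence


def gvl_scores(frames: Sequence, score_fn: Callable[[list], list],
               seed: int = 0) -> list:
    """Score frames in shuffled order, then map the scores back to temporal order."""
    order = list(range(len(frames)))
    random.Random(seed).shuffle(order)          # hide the temporal order from the scorer
    shuffled = [frames[i] for i in order]
    shuffled_scores = score_fn(shuffled)        # one progress score in [0, 1] per frame
    if len(shuffled_scores) != len(frames):
        raise ValueError("score_fn must return one score per frame")
    scores = [0.0] * len(frames)
    for position, original_index in enumerate(order):
        scores[original_index] = shuffled_scores[position]
    return scores


def is_success(scores: Sequence[float], threshold: float = 0.9) -> bool:
    """Success detection: the final frame should be scored as (nearly) complete."""
    return bool(scores) and scores[-1] >= threshold


def fake_vlm(frames: list) -> list:
    """Toy stand-in for the VLM: frame i of this fake episode is 'i/10 done'."""
    return [f / 10 for f in frames]


episode = list(range(11))                       # placeholder "frames" 0..10
scores = gvl_scores(episode, fake_vlm)
print(scores)
print("keep episode:", is_success(scores))
```

Filtering a dataset would then just mean keeping the episodes for which `is_success` returns true.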

This is what it looks like in the Dyna research blogpost:

This is from an experiment I did comparing the Gemini models and GPT:

Using GPT for this is really expensive though! Creating 5-10 of these videos costs 2$! One thing that I haven't implemented yet is an in-context example with frames of the task; this might make the lines significantly smoother.

Why am I doing this? While I hope that the model trained on 1500 samples will be significantly better than the model trained on 500, I am certain that it won't work with >90% success. I've been thinking about how I could get there (other than by collecting more data). Applying this method to data collected by the robot autonomously could be an interesting avenue.

Also, I enjoyed listening to this podcast with Karol Hausman, CEO of Physical Intelligence, and a staff researcher, and highly recommend it.

Collecting 1500 episodes

Monday & Tuesday, 8th/9th of July

I spent the days just collecting data. The changes to the recording script HUGELY improve the time it takes to collect data. What took me 1h before is now 20m of data collection and 40m of processing time, during which I can do something else. I used that time to continue reading the General Robots Substack, which I also highly recommend.

I also had some time to think and summarise a few learnings for myself:

- Don't save on the webcam: I bought a 60€ webcam instead of the Logitech C922, which was used in the Aloha paper and was the camera I was originally considering. The cheap camera I bought is very light sensitive, and in normal indirect daylight the white pieces and parts of the chess board are overexposed, which I imagine doesn't help the learning process.

- Regularly look at your own data and figure out whether it's optimal for learning: I noticed the camera on the arm of the robot is not focussed (you can adjust that manually). Maybe it will add a bit to the robustness of my model, but given the same amount of data, more blurry parts of it will be detrimental to quality.

- If you build a robot, try to keep lighting as constant as possible: I noticed in this Dyna demo what lengths they went to in order to keep the lighting constant, but looking at my data I notice how many variations in brightness it has, depending on the time of day. I initially underestimated the number of samples I'd need to get a decent version working (I'm not sure whether 1500 episodes will produce an OK model) and thought that it would be cool to have a robust model that can work in many different settings, as this would be 'closer to a real-life' setting. True - but also very painful. Again, I underestimated how many samples it would take and I'd be happy to have a model that somewhat reliably works in ONE setting.

Goal Setting for the Week & Augmenting Script for Faster Data Collection

Sunday, 6th of July

My big hypothesis developed from last week is that I most likely need way more data. I want to disprove or confirm this hypothesis as quickly as possible. I planned the week ahead and set three main goals:

- Collect 1,100 episodes of data and retrain the ACT & SmolVLA models

- Annotate 300 images for the chess piece detection model and retrain it

- Connect the chessboard reading code with the model that executes the chess move

Besides that, I want to use the early mornings of the data collection days — while I’m still fresh — to get some reading done.

The biggest bottleneck in data collection last week was post-processing: recording a 15s episode took 30–40s to encode immediately afterward. This slowed down the loop significantly.

I updated the LeRobot code so that image-to-video processing now happens in batch after recording is complete. This change should halve the time per episode — if not more — and make the entire data collection process significantly faster.
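To put rough numbers on the expected speed-up, here is a small calculation using the figures above (15s of recording, 30–40s of encoding per episode). It only counts the time I have to sit at the robot; the encoding still has to run afterwards, just unattended. The function name and the 100-episode batch are illustrative assumptions, and time spent resetting the board between episodes is ignored.

```python
def hands_on_seconds(episodes: int, record_s: float, encode_s: float,
                     batch_encoding: bool) -> float:
    """Time spent at the robot: encoding only blocks the operator when done inline."""
    per_episode = record_s if batch_encoding else record_s + encode_s
    return episodes * per_episode


for encode_s in (30, 40):
    before = hands_on_seconds(100, 15, encode_s, batch_encoding=False)
    after = hands_on_seconds(100, 15, encode_s, batch_encoding=True)
    print(f"encode {encode_s}s: {before / 60:.1f} min -> {after / 60:.1f} min "
          f"hands-on ({after / before:.0%} of before)")
```

Under these assumptions the hands-on time drops to roughly a third or less of what it was.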

I also read this substack on scaling laws for VLAs.

Meeting people for coffee and 4th of July

Friday, 4th of July

I met with friends visiting SF and a guy I know from the robotics hackathon in Paris who's also founding a company now. I also took some time off to celebrate the 4th of July.

Ok, it was time for a shitty day again

Thursday, 3rd of July

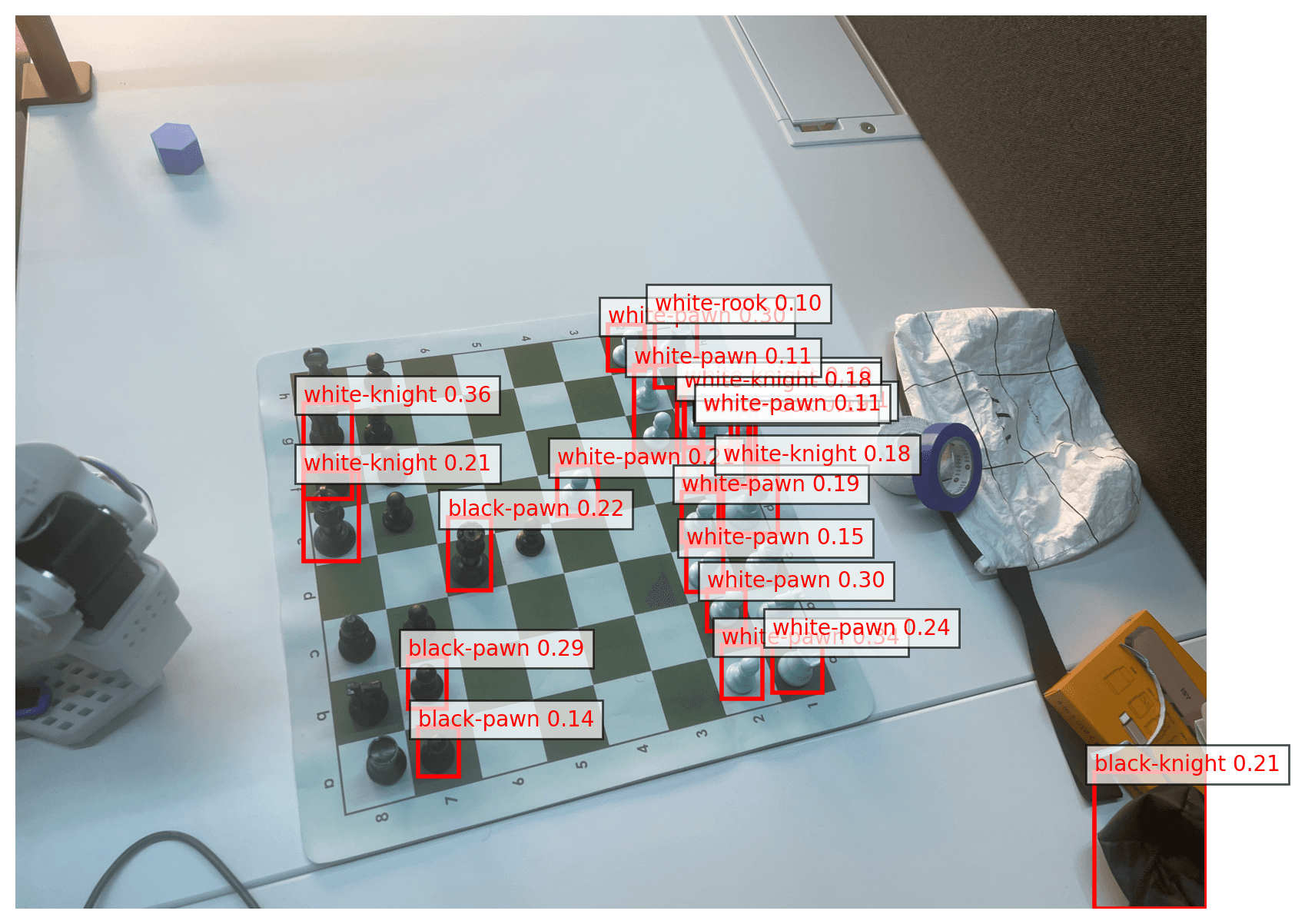

Today I kicked off training for an ACT and SmolVLA model. In the early evening, I tried out the first checkpoints and was disappointed. The 20k step checkpoint of the SmolVLA model doesn't work at all, and the 80k ACT model (this was the furthest checkpoint at the time) isn't much better.

SmolVLA model trained for 20k steps at a batch size of 8:

ACT model trained for 80k steps at a batch size of 8:

I need to wait for the final results, but I'm not quite sure what’s best to do if the final models aren't better tomorrow. I'm gonna think out loud:

- First, I should do a systematic evaluation of the checkpoints to understand:

- Which of the two models is working better, and is it by a clear margin?

- Does longer training (later checkpoints) lead to better results? → If yes, then train a model beyond 100k steps.

- The 100 samples where I only move the rooks could be confusing to the model. Does a model trained without this data do better?

- I could train another 500 episodes, train on the joint dataset, and see if this improves the model.

- I could do a literature review on how others have trained imitation learning models and see if there are any tricks to get this done.
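For the systematic checkpoint evaluation in the first point above, a simple approach would be to run each checkpoint for a fixed number of trials and compare success rates with a confidence interval, so that "by a clear margin" has a concrete meaning. The sketch below uses the Wilson score interval. The tallies are hypothetical placeholders, not my results, and the non-overlapping-interval rule is a deliberately conservative choice of mine.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple:
    """Wilson score interval for a success rate (z=1.96 gives roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z ** 2 / trials
    centre = (p + z ** 2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z ** 2 / (4 * trials ** 2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def clearly_better(a: tuple, b: tuple) -> bool:
    """True if a's lower bound lies above b's upper bound; a and b are (successes, trials)."""
    return wilson_interval(*a)[0] > wilson_interval(*b)[1]


# Hypothetical tallies: (successful moves, attempted moves) per checkpoint.
results = {"act_080k": (3, 20), "act_100k": (6, 20), "smolvla_020k": (0, 20)}

for name, (wins, n) in results.items():
    low, high = wilson_interval(wins, n)
    print(f"{name}: {wins}/{n} = {wins / n:.0%} (95% CI {low:.0%} to {high:.0%})")

print(clearly_better(results["act_100k"], results["act_080k"]))
```

With only 20 trials per checkpoint the intervals are wide, so small differences between checkpoints would not count as a clear margin.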

Ok, I just revisited this video I had seen 1–2 months ago of someone else who built a chess robot. I noticed the description saying it’s trained on 1500 episodes! I currently have 500 — or 400 if you only count episodes from chess games — so maybe I just need to collect more data. The robots in the video have a better setup:

(1) The robots are in a fixed position relative to the chessboard, and

(2) Their camera is pointing at the pieces from below, such that the pieces are in view from the beginning.

Maybe the solution really is just collecting more data.